JDeveloper 11g has one extremely useful feature - Edit Definition for Data Control elements. I hadn't noticed it before, even though I had heard many requests for this functionality, so I was happy to discover it.

We often need to navigate to a View Object directly from the ViewController layer, and this is especially painful in big projects. Instead of investigating the application structure manually, a developer can do it easily from JDeveloper - just right-click the Data Control element and select the Edit Definition option:

The corresponding View Object opens immediately:

The same functionality can be invoked from the Data Control shown in the Page Definition file:

Enjoy!

This is my last post this year.

I wish you a great 2010 and see you next year! :-)

Thursday, December 31, 2009

Wednesday, December 30, 2009

Conditionally Required Fields in Oracle ADF Faces Rich Client

Sometimes a functional requirement calls for an input field to become required based on the value of another field. It sounds simple, but there is one trick you should know when implementing it. The first thing an ADF developer will try is to set the AutoSubmit property on the controlling field and the PartialTriggers property on the dependent, conditionally required field. At runtime, this does not work so smoothly. Suppose we define PhoneNumber as dependent on JobId, and implement the required condition to be true when JobId = AD_PRES:

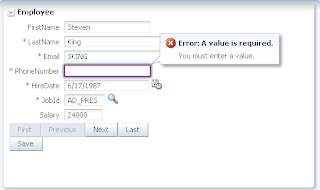

We run into a problem when the PhoneNumber field is empty. The user tries to change the JobId value to something else, but gets stuck because the framework reports a required field validation error:

This happens because a PartialTriggers dependency was defined between PhoneNumber and JobId. Any change in the parent attribute triggers the child attribute, where validation is invoked and fails for the empty value.

Instead of defining the PartialTriggers dependency directly between the two fields, we should trigger the partial refresh from Backing Bean code. Let's define a ValueChangeListener for the parent field, JobId in my case:

The listener code is self-explanatory: based on the JobId value, we set PhoneNumber as required or not, and then add it as a partial target. This is the key difference compared to the PartialTriggers dependency: now we change the required property of the dependent attribute first, and only then trigger the refresh of that attribute. This avoids the validation error while changing the condition for the required property:

It works fine, but don't forget the initial rendering of PhoneNumber: there is already a predefined expression for the Required property. This creates trouble because we are trying to change that property from the value change listener, and an ADF_FACES-60058 exception is thrown when JobId changes:

This is because an Expression Language expression is already defined for the Required property:

Now we will apply an old trick from ADF 10g: instead of using Expression Language, we will calculate the Required property value in a Backing Bean method:

This method works the same way as the value change listener explained above, with one difference - it always returns false:

The main trick is to bind this method not to the Required property itself, but to an unrelated property, for example Readonly. This avoids the ADF_FACES-60058 exception described above. That is why the method always returns false: the field should always be editable.
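Since the original screenshots of the bean code are not reproduced here, the following is only a plain Java model of the idea, not the code from the sample application. FieldModel and EmployeeFormBean are hypothetical stand-ins with no ADF dependency, and IT_PROG is just an illustrative JobId. In a real backing bean the flag would be set on the PhoneNumber input component and the refresh would be requested through a partial target, as described above.

```java
import java.util.ArrayList;
import java.util.List;

/** Stand-in for the PhoneNumber input component; NOT an ADF class. */
final class FieldModel {
    private boolean required;

    boolean isRequired() { return required; }

    void setRequired(boolean required) { this.required = required; }
}

/** Hypothetical backing bean modelling the two hooks described in the post. */
final class EmployeeFormBean {
    static final String PRESIDENT_JOB = "AD_PRES";

    private final FieldModel phoneNumber = new FieldModel();
    private final List<String> refreshLog = new ArrayList<>();
    private String currentJobId;

    EmployeeFormBean(String initialJobId) {
        this.currentJobId = initialJobId;
    }

    /** Value change listener for JobId: change the flag first, refresh second. */
    void onJobIdChanged(String newJobId) {
        currentJobId = newJobId;
        phoneNumber.setRequired(PRESIDENT_JOB.equals(newJobId));
        // Only now ask for a refresh; in ADF this is where the dependent
        // field would be added as a partial target.
        refreshLog.add("PhoneNumber");
    }

    /** Bound to Readonly (not Required); sets Required as a side effect. */
    boolean isPhoneNumberReadOnly() {
        phoneNumber.setRequired(PRESIDENT_JOB.equals(currentJobId));
        return false; // the field must always stay editable
    }

    FieldModel getPhoneNumber() { return phoneNumber; }

    List<String> getRefreshLog() { return refreshLog; }

    public static void main(String[] args) {
        EmployeeFormBean bean = new EmployeeFormBean("IT_PROG");
        bean.isPhoneNumberReadOnly();   // initial rendering
        bean.onJobIdChanged("AD_PRES"); // user changes JobId
        System.out.println(bean.getPhoneNumber().isRequired()); // true
    }
}
```

The point of the model is the ordering inside onJobIdChanged: the required flag is updated before the refresh is requested, so the dependent field is never validated against a stale condition.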

At runtime, when JobId is not AD_PRES, PhoneNumber is not required:

If we navigate to a record where JobId is AD_PRES, PhoneNumber is automatically required:

Find an employee with JobId not equal to AD_PRES and change it to AD_PRES:

The PhoneNumber field becomes required:

Now try to change JobId again; you should be allowed to make the change:

The PhoneNumber field is no longer required:

Download sample application - ConditionalMandatory.zip.

Sunday, December 27, 2009

Linux Libraries Prerequisites for WebCenter Suite 11g Installation

George Maggessy has a useful blog post about WebCenter Suite 11g installation - Installing WebCenter Suite 11g on Linux for Development. I followed the outlined instructions and installed the WebCenter 11g components successfully. However, I want to add one more hint to that post, about the Linux library prerequisites for WebCenter 11g installation.

While I was installing WebCenter Suite 11g, the prerequisites check reported three missing Linux libraries in my case:

- sysstat-5.0.5-1.i386.rpm

- compat-libstdc++-296-2.96-132.7.2.i386.rpm

- compat-db-4.1.25-9.i386.rpm

As George mentions, you can use yum or apt-get to install those libraries; however, you will need a subscription to Linux support for that. I don't have a support subscription for Oracle Enterprise Linux, so I installed the libraries manually.

First, I downloaded the missing libraries from the RPM Search site. Just type the name of the library you are looking for, and you are ready to download. Once you have the required libraries, switch to superuser mode with the su command in the Linux shell and type rpm -ihv libraryname to install each one.

Once the required libraries are installed, the WebCenter Suite 11g installation prerequisites check passes without warnings.

Friday, December 25, 2009

Installing VMware Tools on Oracle Enterprise Linux

I was installing Oracle Fusion Middleware 11g on Oracle Enterprise Linux 5, and before doing so I wanted to prepare the VMware image properly. I'm running Mac OS and using VMware Fusion for Linux virtualization. After I installed Linux into the VMware image, I started the VMware Tools installation for the guest OS. You need VMware Tools in order to improve display properties and other runtime characteristics of the guest OS. It was my first time installing VMware Tools on a Linux guest, and I faced one small issue I want to share.

After you invoke the Install VMware Tools option from the VMware menu:

You will see the VMware Tools image opened inside Linux:

My mistake was extracting the installation archive directly inside the VMware Tools image. To run the VMware Tools installation, you should first copy the installation package into the guest OS folder structure (for example, the tmp folder) and run it from there.

Wednesday, December 23, 2009

View Criteria Inside ADF Library

I got a question from a blog reader asking whether it is possible to include a VO with View Criteria in an ADF Library and later reuse this View Criteria from another ADF BC project. The reader was facing problems, so as usual I developed a working sample application and am posting it here.

Download the working sample - ViewCriteriaReuse.zip. This application implements two ADF BC projects, ModelCommon and Model. Inside ModelCommon I created a LOV VO for Departments and defined View Criteria to filter on the LocationId attribute:

View Criteria definition:

The View Object from ModelCommon, DepartmentsLovView in this case, is imported into the Model project:

Now we come to the point where the reader had problems. While defining a LOV on the DepartmentId attribute, he wasn't able to set a View Accessor from the shared VO. However, it should work fine, as it does here:

At runtime, the LOV is filtered accordingly:

Database Connection in ADF BC 11g

My colleague was facing an ADF BC development question and asked me to help. He sent me the whole application, so I was able to open it directly in my local JDeveloper 11g. While investigating the actual question, I noticed strange behavior in ADF BC. For example, attribute types were not recognized and I was getting log messages - oracle.jbo.domain.Number not found.

I dug deeper and saw that the Number type was displayed as oracle.jbo.domain.Number, while usually it is shown simply as Number:

I opened the attribute editor and saw that the type list offered only a small set of types; the Number type was not available:

That was strange and I was a bit frustrated. Then I realized that the database connection was not defined, and this might be the reason for the problem:

The database connection wasn't present because my colleague was using an IDE-level connection. I defined the HrDS connection locally:

After an IDE restart, everything worked properly and the Number type was recognized again:

And the type list showed all available types:

This means the missing database connection definition had a direct impact on ADF BC development. If you face the same issue, make sure the following files are present inside your application - .adf/META-INF/connections.xml, src/META-INF/cwallet.sso and src/META-INF/jps-config.xml.
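If you want to verify this quickly for an application received from someone else, a small check like the one below can help. It is only a sketch: the class name ConnectionFilesCheck is made up for illustration, and it assumes the three paths listed above are relative to the application root folder you pass in.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: reports which connection-related files are missing. */
final class ConnectionFilesCheck {
    // Paths taken from the post, assumed relative to the application root.
    static final String[] REQUIRED_FILES = {
        ".adf/META-INF/connections.xml",
        "src/META-INF/cwallet.sso",
        "src/META-INF/jps-config.xml"
    };

    /** Returns the relative paths that do not exist as regular files. */
    static List<String> missingFiles(Path applicationRoot) {
        List<String> missing = new ArrayList<>();
        for (String relative : REQUIRED_FILES) {
            if (!Files.isRegularFile(applicationRoot.resolve(relative))) {
                missing.add(relative);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Application root defaults to the current directory.
        Path root = Paths.get(args.length > 0 ? args[0] : ".");
        List<String> missing = missingFiles(root);
        if (missing.isEmpty()) {
            System.out.println("All connection files are present.");
        } else {
            for (String path : missing) {
                System.out.println("Missing: " + path);
            }
        }
    }
}
```

Run it with the application folder as the only argument; any path it prints is a candidate cause of the unrecognized types described above.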

Download the sample application with the database connection files in place - DatabaseConnectionADFBC.zip.

By the way, the original question from my colleague was solved as well ;-)

Tuesday, December 22, 2009

How to do Manual Deployment for ADF 11g Application EAR with Auto Generated JDBC Descriptors

Sometimes you may face a requirement to deploy a previously generated EAR on another server. In my specific case we were deploying to WebLogic server directly from JDeveloper 11g, with auto generation of JDBC descriptors enabled - see my previous post. For specific reasons, we now needed to redeploy the same EAR on another server, but we no longer had access to the development environment. So we took the previously deployed EAR from the ORACLE_HOME/user_projects/domains/domain_name/servers/AdminServer/upload folder and tried to deploy it manually. Of course we got an error, because the EAR was generated with JDBC descriptor auto generation enabled. It was the same error as described in the blog post mentioned above - No credential mapper entry found for password indirection user=hr data source HrDS:

This error is logical. What we need to do is modify the Deployment Plan and remove the JDBC descriptor declaration. Oracle Enterprise Manager 11g offers an option to edit the Deployment Plan just before the actual deployment, and it allows you to delete the JDBC descriptor:

Bad news - it does not remove it, even after pressing Apply. The Delete action does not work and the Deployment Plan remains the same, so deployment fails with the same error mentioned above.

It seems there is no choice but to apply the good old manual approach. Extract the EAR archive you want to deploy and go to the META-INF folder. Remove (after backing up) the JDBC configuration file (HrDS-jdbc.xml in my case) and open the weblogic-application.xml file for editing:

From the weblogic-application.xml file, remove the entry related to the JDBC descriptor:

Now pack your EAR back; you can simply Zip it and rename the file extension to ear. With the modified descriptors, the Deployment Plan will be updated automatically and deployment should be successful:
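If you need to repeat these manual steps often, they can be scripted. The sketch below is not part of the original procedure and rests on several assumptions: the class name EarJdbcStripper is made up, the JDBC descriptor is assumed to live at META-INF/HrDS-jdbc.xml inside the EAR, weblogic-application.xml is assumed to be UTF-8, and the JDBC entry is assumed to be a module element whose path child holds that descriptor path. Check your own descriptor before relying on it.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.regex.Pattern;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;
import java.util.zip.ZipOutputStream;

/** Hypothetical helper: copies an EAR without its auto-generated JDBC descriptor. */
final class EarJdbcStripper {
    static final String APP_DESCRIPTOR = "META-INF/weblogic-application.xml";

    /** Removes each module element whose path child equals jdbcPath (assumed layout). */
    static String removeJdbcModule(String xml, String jdbcPath) {
        String notPastModuleEnd = "(?:(?!</module>).)*?";
        String pattern = "(?s)\\s*<module>" + notPastModuleEnd
                + "<path>\\s*" + Pattern.quote(jdbcPath) + "\\s*</path>"
                + notPastModuleEnd + "</module>";
        return xml.replaceAll(pattern, "");
    }

    /** Copies the archive entry by entry, dropping jdbcPath and patching the descriptor. */
    static void strip(InputStream earIn, OutputStream earOut, String jdbcPath) throws IOException {
        try (ZipInputStream in = new ZipInputStream(earIn);
             ZipOutputStream out = new ZipOutputStream(earOut)) {
            ZipEntry entry;
            while ((entry = in.getNextEntry()) != null) {
                if (entry.getName().equals(jdbcPath)) {
                    continue; // skip the JDBC configuration file itself
                }
                byte[] data = readAll(in);
                if (entry.getName().equals(APP_DESCRIPTOR)) {
                    String xml = new String(data, StandardCharsets.UTF_8);
                    data = removeJdbcModule(xml, jdbcPath).getBytes(StandardCharsets.UTF_8);
                }
                out.putNextEntry(new ZipEntry(entry.getName()));
                out.write(data);
                out.closeEntry();
            }
        }
    }

    private static byte[] readAll(InputStream in) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        byte[] chunk = new byte[8192];
        int n;
        while ((n = in.read(chunk)) != -1) {
            buffer.write(chunk, 0, n);
        }
        return buffer.toByteArray();
    }

    /** Arguments: input EAR, output EAR, descriptor path inside the EAR. */
    public static void main(String[] args) throws IOException {
        try (InputStream in = Files.newInputStream(Paths.get(args[0]));
             OutputStream out = Files.newOutputStream(Paths.get(args[1]))) {
            strip(in, out, args[2]);
        }
    }
}
```

Keep the original EAR as a backup, the same way the manual approach backs up the JDBC configuration file, and write the result to a new file rather than overwriting the input.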

Just make sure you have the JDBC Data Source defined on WLS. The application will use a Data Source that must already be present on the WebLogic server; it will not be auto-generated. You should also check that your deployment target is listed in the JDBC Data Source targets:

Running application:

Sunday, December 20, 2009

Producing JSR 168 Portlets Directly From ADF Task Flows with Oracle WebCenter 11g

While working with different customers, I get questions about how to expose ADF Task Flows to third-party Portals. This is especially important for companies developing their own products based on Oracle Fusion Middleware 11g. You need to ensure that your product is pluggable and compatible with different environments. With Oracle ADF 11g and WebCenter 11g, you can expose your ADF Task Flows through the portlet bridge as standard JSR 168 portlets and plug them into different Portals.

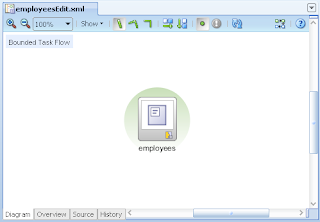

I continue the series of posts on integration with a new sample application - ADFIntegration7.zip. I will describe how you can expose an ADF Task Flow as a portlet, and then how to consume this portlet from a WebCenter 11g application. The sample contains one ADF Task Flow with a region:

I will define a portlet bridge for this ADF Task Flow and expose the available fragment:

First Part: Exposing ADF Task Flow as portlet

It is enough to right-click the ADF Task Flow and select the Create Portlet Entry option:

Complete the wizard steps and you are done - the portlet bridge is defined. Now define a WAR deployment profile; we will use it to deploy the portlet to WebLogic server:

For test purposes, I will use the WebLogic server instance embedded in JDeveloper 11g; you can start it directly from JDeveloper 11g:

Right-click the ViewController project and select Deploy:

While the deployment process is running, you will be asked to proceed with JSR 168 portlet deployment:

The deployment log shows the application URL:

Open this URL in your browser; you can test the WSDL URL from there:

Second Part: Consuming portlet in WebCenter 11g application

In the Resource Palette, define a new WSRP Producer Connection:

You will need to specify the portlet WSDL URL:

The portlet should become visible in the Resource Palette; you can drag and drop it from there directly onto your page, the same as any other component:

Let's drag and drop it onto a JSPX page in another application:

At runtime, the Employees Editing portlet is loaded, and the user sees it just like a usual ADF application. In this case both the Query Criteria and the results table come through the consumed portlet:
