When you build machine learning model, first start with the data - make sure input data is prepared well and it represents true state of what you want machine learning model to learn. Data preparation task takes time, but don't hurry - quality data is a key for machine learning success. In this post I will go through essential steps required to bring data into right shape to feed it into machine learning algorithm.

Sample dataset and Python notebook for this post can be downloaded from my

GitHub repo.

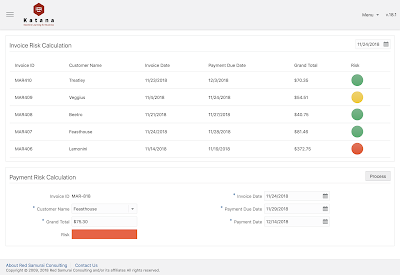

Each row from dataset represents invoice which was sent to customer. Original dataset extracted from ERP system comes with five columns:

customer - customer ID

invoice_date - date when invoice was created

payment_due_date - expected invoice payment date

payment_date - actual invoice payment date

grand_total - invoice total

invoice_risk_decision - 0/1 value column which describe current invoice risk. Goal of machine learning module will be to identify risk for future invoices, based on risk estimated for historical invoice data.

There are two types of features - categorical and continuous:

categorical - often text than number, something that represents distinct groups/types

continuous - numbers

Machine learning typically works with numbers. This means we need to transform all categorical features into continuous. For example,

grand_total is continuous feature, but dates and customer ID are not.

Date can be converted to continuous feature by breaking it into multiple columns. Here is example of breaking

invoice_date into multiple continuous features (year, quarter, month, week, day of year, day of month, day of week):

Using this approach all date columns can be transformed into continuous features. Customer ID column can be converted into matrix of 0/1. Each unique text value is moved into separate column and assigned with 1, all other column in that row are assigned with 0. This transformation can be done with Python library called Pandas, we will see it later.

You may or may not have decision values for your data, this depends how data was collected and what process was implemented in ERP app to collect this data. Decision column (

invoice_risk_decision) value represents business rule we want to calculate with machine learning. See 0/1 assigned to this column:

Rule description:

0 - invoice was payed on time, payment_date less or equal payment_due_date

0 - invoice wasn't payed on time, but total is less than all invoices total average and payment delay is less or equal 10% for current customer average

1 - all other cases, indicates high invoice payment risk

I would recommend to save data in CSV format. Once data is prepared, we can load it in Python notebook:

I'm using Pandas library (imported through

pd variable) to load data from file into data frame. Function head() prints first five rows from data frame (dataset size 5x24):

We can show number of rows with 0/1, this helps to understand how data set is constructed - we see that more than half rows represent invoices without payment risk:

Customer ID column is not a number, we need to convert it. Will be using Pandas

get_dummies function for this task. It will turn every unique value into a column and place 0 or 1 depending on whether the row contains the value or not (this will increase dataset width):

Original customer column is gone, now we have multiple columns for each customer. If customer with ID = 4 is located it given row, 1 is set:

Finally we can check correlation between decision column -

invoice_risk_decision and other columns from dataset. Correlation shows which columns will be used by machine learning algorithm to predict a value based on the values in other columns in the dataset. Here is correlation for our dataset (all columns with more than 10% correlation):

As you can see, all date columns have high correlation as well as

grand_total. Our rule tells that invoice payment risk is low, if invoice amount is less than all total average - thats why correlation on

grand_total value exist.

Customer with ID = 11 is the one with largest number of invoices, correlation for this customer is higher than for others, as expected.